Kubernetes Overview

With containers now widely adopted across organizations, Kubernetes, the container-centric management software, has become a standard for deploying and operating containerized applications and one of the most important tools in DevOps.

Kubernetes was originally developed at Google, inspired by Google's internal cluster management system, Borg, and released as open source in 2014. It builds on 15 years of running Google's containerized workloads and on valuable contributions from the open-source community.

What is Kubernetes?

Kubernetes is an open-source orchestration tool, originally developed by Google, for managing microservices and containerized applications across a distributed cluster of nodes.

The abbreviation K8s comes from counting the eight letters between the "K" and the "s".
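The counting rule can be checked with a few lines of Python. This is only an illustrative sketch; the helper name `numeronym` is made up for this example and is not part of any Kubernetes tooling.

```python
def numeronym(word: str) -> str:
    """Abbreviate a word as: first letter + number of inner letters + last letter."""
    if len(word) <= 3:
        # Too short to abbreviate in a meaningful way.
        return word
    return f"{word[0]}{len(word) - 2}{word[-1]}"


print(numeronym("Kubernetes"))  # K8s
```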

Kubernetes provides a highly resilient infrastructure with zero-downtime deployments, automatic rollback, scaling, and self-healing of containers (auto-placement, auto-restart, auto-replication, and scaling of containers based on CPU usage).
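To make "scaling of containers based on CPU usage" more concrete, here is a minimal sketch of the replica calculation that the Horizontal Pod Autoscaler documentation describes: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The function and parameter names are assumptions for this example, and the real autoscaler also applies a tolerance and minimum/maximum bounds that are left out here.

```python
import math


def desired_replicas(current_replicas: int,
                     current_cpu_percent: int,
                     target_cpu_percent: int) -> int:
    """Simplified autoscaling rule: ceil(current * observed / target)."""
    if target_cpu_percent <= 0:
        raise ValueError("target_cpu_percent must be positive")
    return math.ceil(current_replicas * current_cpu_percent / target_cpu_percent)


# 4 replicas running at 90% CPU with a 60% target: scale out.
print(desired_replicas(4, 90, 60))  # 6
```

With the same rule, 3 replicas at 30% CPU against a 60% target would shrink to 2.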

Kubernetes grew out of Google's Borg and Omega projects; Google has used such internal systems to orchestrate its data centers since 2003.

Google open-sourced Kubernetes in 2014.

What is Orchestration?

To run certain applications, many containers need to be spun up and managed. The deployment of these containers can be automated to optimize this process, which is especially beneficial as the number of hosts grows. This automation is called orchestration.

What are the benefits of using k8s?

Extensibility:- This is the ability of a tool to have its capabilities extended without serious infrastructure changes. Users can freely extend Kubernetes and add services, which means they can add their own features such as security updates, server hardening, or other custom functionality.

Portability:- In its broadest sense, this is the ability of an application to be moved from one machine to another, so the package can run anywhere. You could be running your application on Google Cloud and later become interested in IBM Watson services, or decide to use a cluster of Raspberry Pi boards in your backyard. The application-centric nature of Kubernetes lets you package your app once and migrate it from one platform to another with little rework.

Self-healing:- Kubernetes offers application resilience through operations it initiates itself: automatic restart when an app crashes, automatic replication of containers, and automatic scaling depending on traffic. Kubernetes learns the health of an application by evaluating its main process, exit codes, and health checks, among other signals, which allows it to respond to failures effectively.

Load balancing:- Kubernetes distributes incoming requests across the available instances of an application, which keeps it available on demand and avoids undue strain on any single resource. In the context of Kubernetes, there are two types of load balancers: internal and external. The creation of a load balancer is an asynchronous process, and information about the provisioned load balancer is published in the Service's status.loadBalancer field.
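Because provisioning is asynchronous, a client usually has to check the Service's status until an address shows up. The sketch below assumes a Service object has already been fetched and parsed into a plain Python dictionary; the helper name and the sample addresses are made up for illustration, and no cluster is contacted.

```python
from typing import Dict, List


def load_balancer_addresses(service: Dict) -> List[str]:
    """Return the IPs or hostnames published under status.loadBalancer.ingress.

    An empty list means the load balancer has not been provisioned yet.
    """
    ingress = service.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    addresses = []
    for entry in ingress:
        address = entry.get("ip") or entry.get("hostname")
        if address:
            addresses.append(address)
    return addresses


# Hypothetical Service objects, before and after provisioning completes.
pending = {"status": {"loadBalancer": {}}}
ready = {"status": {"loadBalancer": {"ingress": [{"ip": "203.0.113.10"}]}}}

print(load_balancer_addresses(pending))  # []
print(load_balancer_addresses(ready))    # ['203.0.113.10']
```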

Popular Container Orchestrators

Docker Swarm

Mesos (Mesosphere)

Nomad

Cloud Foundry

Cattle

Cloud (Azure, Amazon, Google, Alibaba, IBM)

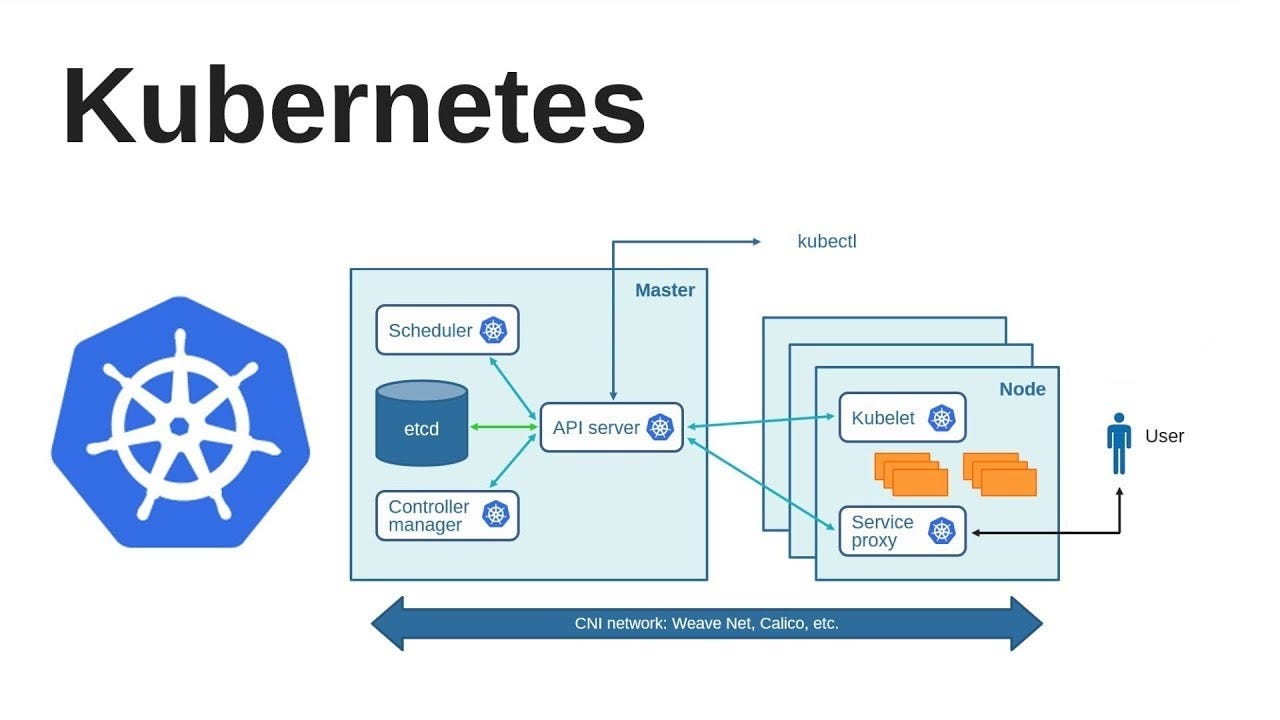

Explain the architecture of Kubernetes

A Kubernetes cluster has two main components:-

Master nodes, also known as the Control Plane or the manager, control and manage the whole Kubernetes system.

Worker nodes, also known as the Data Plane, are where the actual applications run.

Control Plane:-

The control plane is responsible for container orchestration and maintaining the desired state of the cluster. It has the following components.

kube-apiserver

etcd

kube-scheduler

kube-controller-manager

Data Plane:-

The Data Plane is responsible for running containerized applications. Each worker node has the following components.

kubelet

kube-proxy

Container runtime

The Control Plane / Master / Manager Components:-

The control plane consists of multiple components that can run on a single master node or be split across multiple nodes and replicated to ensure high availability.

The main components are:-

API Server:- The Kubernetes API server acts as a gateway through which end users and the other Control Plane components communicate with the Kubernetes cluster. It is responsible for orchestrating all operations (scaling, updates, and so on) in the cluster.

Scheduler:- As the name suggests, the Scheduler schedules the end user's application by selecting a worker node for each pod. The scheduler is only responsible for deciding on the node; it doesn't actually place the apps (pods) on the nodes, which is the kubelet's job.
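The "decide, but don't place" role can be sketched as a filter step followed by a scoring step. This is a toy model under stated assumptions, not the real kube-scheduler: the `Node` fields, the `pick_node` helper, and the "most free CPU wins" scoring rule are all hypothetical choices made for this example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


def pick_node(nodes: List[Node], cpu_request: int, memory_request: int) -> Optional[str]:
    """Return the name of the chosen node, or None if no node fits."""
    # Filter: keep only nodes with enough free CPU and memory for the pod.
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= cpu_request and n.free_memory_mib >= memory_request
    ]
    if not feasible:
        return None
    # Score: prefer the node left with the most free CPU, then the most free memory.
    best = max(feasible, key=lambda n: (n.free_cpu_millicores, n.free_memory_mib))
    return best.name


nodes = [
    Node("node-a", free_cpu_millicores=500, free_memory_mib=1024),
    Node("node-b", free_cpu_millicores=2000, free_memory_mib=4096),
    Node("node-c", free_cpu_millicores=1500, free_memory_mib=512),
]
print(pick_node(nodes, cpu_request=1000, memory_request=1024))  # node-b
```

Note that the function only returns a node name. Starting the containers on that node is a separate step, which matches the division of labor described above.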

Controller Manager:- The Controller Manager performs cluster-level functions, such as replicating components, keeping track of worker nodes, and handling node failures. It bundles multiple controllers, such as the node controller, which keeps track of the nodes in the cluster.

Replication Controller:- A ReplicationController ensures that a specified number of pod replicas are running at any one time, so that a pod is always up and available. In current clusters, Deployments and ReplicaSets are the recommended way to get this behavior.
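The idea behind keeping "a specified number of pod replicas" running is a reconciliation step: compare the desired count with what is actually running and work out the difference. The following is a minimal sketch of that comparison only; the `reconcile` function and its return format are assumptions for this example, not the controller's actual code.

```python
from typing import Dict, List


def reconcile(desired: int, running: List[str]) -> Dict:
    """Compare desired and actual replicas and return the actions needed."""
    difference = desired - len(running)
    if difference > 0:
        # Too few pods: ask for the missing number to be created.
        return {"create": difference, "delete": []}
    if difference < 0:
        # Too many pods: mark the surplus pods for deletion.
        return {"create": 0, "delete": running[desired:]}
    # Actual state already matches the desired state.
    return {"create": 0, "delete": []}


print(reconcile(3, ["web-1"]))                    # {'create': 2, 'delete': []}
print(reconcile(1, ["web-1", "web-2", "web-3"]))  # {'create': 0, 'delete': ['web-2', 'web-3']}
```

A real controller runs this kind of comparison continuously, so a crashed pod simply shows up as a shortfall on the next pass.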

Node Controller:- The node controller is a control plane component that manages various aspects of nodes.

etcd:- etcd is a critical part of Kubernetes. It is a distributed key-value database that stores the state of the cluster, including node and workload information.
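To give a feel for what "state in a key/value format" means, here is a tiny in-memory stand-in for a key-value store. It is not etcd and does not use an etcd client; the class name, the revision counter, and the example key layout are illustrative assumptions.

```python
from typing import Any, Dict, Optional


class TinyKeyValueStore:
    """In-memory sketch of a key-value store with prefix listing."""

    def __init__(self) -> None:
        self._data: Dict[str, Any] = {}
        self._revision = 0

    def put(self, key: str, value: Any) -> int:
        """Store a value and return the new store-wide revision number."""
        self._revision += 1
        self._data[key] = value
        return self._revision

    def get(self, key: str) -> Optional[Any]:
        return self._data.get(key)

    def list_prefix(self, prefix: str) -> Dict[str, Any]:
        """Return all entries whose key starts with the given prefix, sorted by key."""
        return {k: v for k, v in sorted(self._data.items()) if k.startswith(prefix)}


store = TinyKeyValueStore()
# Hypothetical keys: one entry per cluster object, grouped by type and namespace.
store.put("/registry/pods/default/web-1", {"node": "node-b", "phase": "Running"})
store.put("/registry/pods/default/web-2", {"node": "node-c", "phase": "Pending"})
store.put("/registry/nodes/node-b", {"ready": True})

print(list(store.list_prefix("/registry/pods/")))
```

Grouping keys by prefix is what makes a question like "list all pods in the default namespace" a simple range lookup.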

The Worker Node / Data Plane Components:-

Kubelet:- The main service on a node. It connects the node to the master and ensures that pods and their containers are healthy and running in the desired state. It also reports to the master on the health of the host where it is running.

kube-proxy:- A proxy service that runs on each worker node to deal with individual host subnetting and expose services to the external world. It forwards requests to the correct pods/containers across the various isolated networks in a cluster.
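Request forwarding can be pictured as a lookup from a service name to one of its backing pods. The sketch below is only a conceptual model: the `ServiceForwarder` class and the addresses are made up, and round-robin is used here purely to keep the example deterministic. How a backend is actually selected depends on the mode kube-proxy runs in.

```python
import itertools
from typing import Dict, List


class ServiceForwarder:
    """Toy model: map a service name to its pod endpoints and rotate through them."""

    def __init__(self, endpoints: Dict[str, List[str]]) -> None:
        # Only services that have at least one endpoint can receive traffic.
        self._cycles = {
            service: itertools.cycle(pods)
            for service, pods in endpoints.items()
            if pods
        }

    def route(self, service: str) -> str:
        """Return the pod endpoint that should receive the next request."""
        if service not in self._cycles:
            raise LookupError(f"no ready endpoints for service {service!r}")
        return next(self._cycles[service])


forwarder = ServiceForwarder({"web": ["10.0.0.1:8080", "10.0.0.2:8080"]})
for _ in range(3):
    print(forwarder.route("web"))
```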

Container runtime:- The container runtime is the software that is responsible for running containers. Kubernetes supports several container runtimes, including containerd, CRI-O, and Docker Engine (through the cri-dockerd adapter since Kubernetes 1.24).

Kubectl:- kubectl is a command-line tool that interacts with kube-apiserver and sends commands to the master node. Each command is converted into an API call.
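As a rough illustration of "each command is converted into an API call", the sketch below maps a few kubectl-style verbs onto the HTTP method and REST path used for namespaced core resources such as pods. The `api_request_for` helper is hypothetical and covers only this narrow case; real kubectl also handles authentication, query parameters, other API groups, and much more.

```python
from typing import Optional, Tuple

# Assumption for this sketch: only these verbs and core-group resources are handled.
HTTP_METHODS = {"get": "GET", "create": "POST", "delete": "DELETE"}
CORE_RESOURCES = {"pods", "services", "configmaps"}


def api_request_for(verb: str, resource: str,
                    namespace: str = "default",
                    name: Optional[str] = None) -> Tuple[str, str]:
    """Translate a kubectl-style command into (HTTP method, API path)."""
    if verb not in HTTP_METHODS:
        raise ValueError(f"unsupported verb: {verb}")
    if resource not in CORE_RESOURCES:
        raise ValueError(f"unsupported resource: {resource}")
    path = f"/api/v1/namespaces/{namespace}/{resource}"
    if name is not None:
        path += f"/{name}"
    return HTTP_METHODS[verb], path


# Roughly what `kubectl get pods -n default` asks the API server for.
print(api_request_for("get", "pods"))
# Roughly what `kubectl delete pod web-1 -n default` sends.
print(api_request_for("delete", "pods", name="web-1"))
```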

Difference between kubectl and kubelet

kubectl is the command-line interface used to interact with the Kubernetes API server, which is the control plane component responsible for managing the state of the cluster. With kubectl, users can deploy, manage, and monitor Kubernetes resources, such as pods, services, and deployments.

On the other hand, kubelet is the agent that runs on each node in the cluster and is responsible for managing the state of the containers running on that node. It communicates with the control plane to receive instructions on which containers to run and how to configure them. The kubelet ensures that the containers are running and healthy, and reports their status back to the control plane.

Explain the role of the API server

The Kubernetes API is the front end of the Kubernetes control plane and is how users interact with their Kubernetes cluster. The API (application programming interface) server determines if a request is valid and then processes it.
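The "validate, then process" step can be sketched with a small check on a pod manifest. This is a heavily simplified illustration: the `validate_pod_manifest` helper and the handful of rules it checks are assumptions for this example, whereas the real API server also authenticates and authorizes the caller and validates far more fields.

```python
from typing import Dict, List


def validate_pod_manifest(manifest: Dict) -> List[str]:
    """Return a list of validation errors; an empty list means the request is accepted."""
    errors = []
    if manifest.get("apiVersion") != "v1":
        errors.append("apiVersion must be 'v1'")
    if manifest.get("kind") != "Pod":
        errors.append("kind must be 'Pod'")
    if not manifest.get("metadata", {}).get("name"):
        errors.append("metadata.name is required")
    containers = manifest.get("spec", {}).get("containers") or []
    if not containers:
        errors.append("spec.containers must list at least one container")
    for index, container in enumerate(containers):
        if not container.get("image"):
            errors.append(f"spec.containers[{index}].image is required")
    return errors


good = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-1"},
    "spec": {"containers": [{"name": "web", "image": "nginx"}]},
}
bad = {"apiVersion": "v1", "kind": "Pod", "metadata": {}, "spec": {"containers": []}}

print(validate_pod_manifest(good))  # []
print(validate_pod_manifest(bad))
```

Only a request that passes validation would go on to be stored and acted on by the rest of the control plane.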

In essence, the API is the interface used to manage, create, and configure Kubernetes clusters. It's how the users, external components, and parts of your cluster all communicate with each other.

At the center of the Kubernetes control plane is the API server and the HTTP API that it exposes, allowing you to query and manipulate the state of Kubernetes objects.

Thank you for reading!! I hope you find this article helpful!!

If you have any queries or corrections for this blog, please let me know.

Happy Learning!!

Saikat Mukherjee